Hypermedia: The New/Old Architecture Set to Revolutionize Web and Mobile Development

Hypermedia is the tech everyone is talking about, yet it remains a bit of a mystery for many. After diving deep, I’m convinced this new/old approach is not just a trend: it’s poised to disrupt how we build applications on both the web and mobile.

The strategic shift toward Hypermedia-Driven Applications (HDA) fundamentally alters the economics of development, making internal solutions significantly more viable. As the cost of building web and mobile experiences drops, the traditional “build vs. buy” calculus shifts with it.

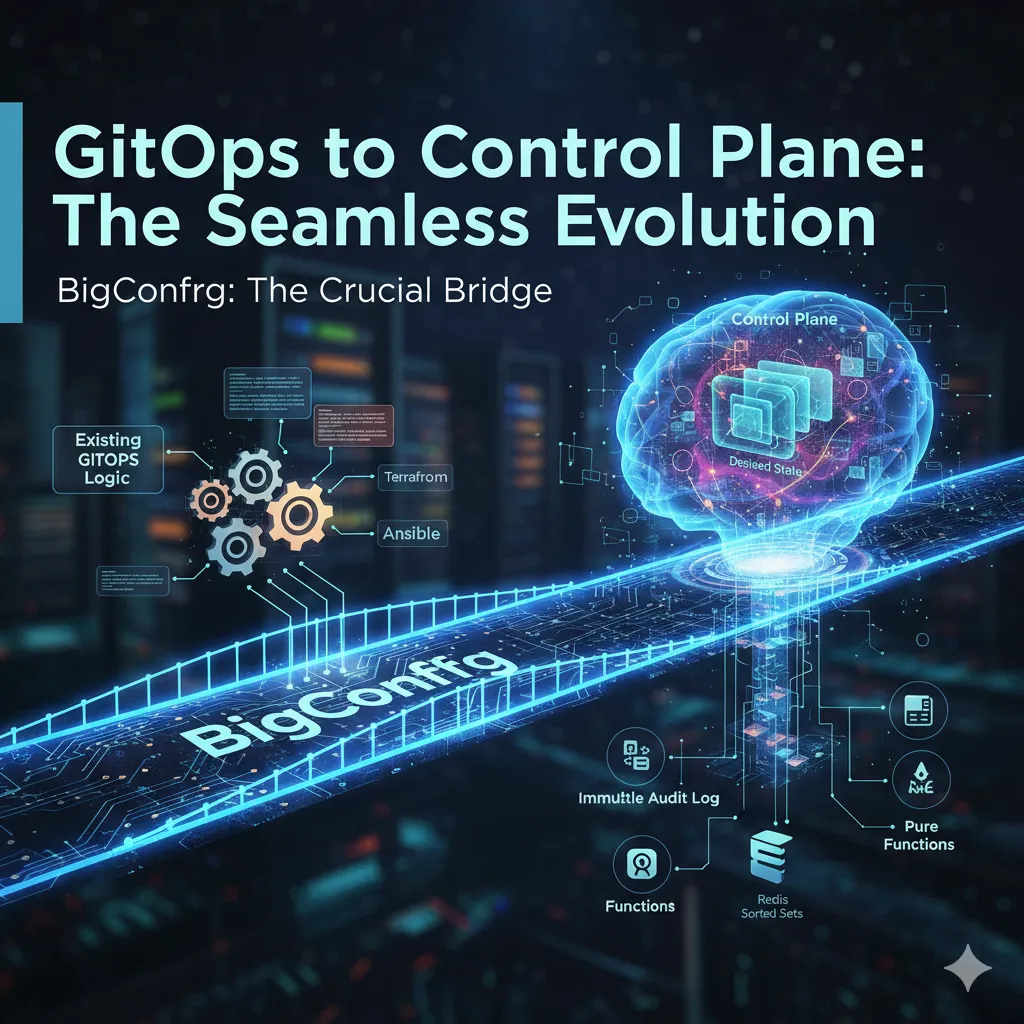

To capitalize on this change, we plan to integrate Internal Developer Portal capabilities directly into BigConfig as our next major step. This addition completes BigConfig’s transformation into the essential, comprehensive library for scalable operations, providing core building blocks such as configuration as code, workflow orchestration, scaffolding, robust persistence, auditing, control planes, and now internal developer portals.